Python libraries used:


    import sys
    import numpy as np
    import matplotlib.pyplot as plt
    from lab_utils_uni import plt_gradients
    import copy
    import math

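Problem statement

A 1,000-square-foot house sold for $300,000, and a 2,000-square-foot house sold for $500,000. Fit a linear regression model that describes the relationship between house size and sale price.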

| Size (1000 sq ft) | Price (1000s of dollars) |
| --- | --- |
| 1 | 300 |
| 2 | 500 |

Recording the data


    x_train = np.array([1.0, 2.0])   # size in units of 1000 sq ft
    y_train = np.array([300, 500])   # price in units of 1000 dollars

Theoretical basis:

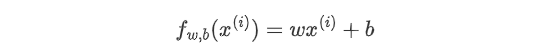

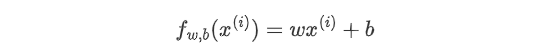

The prediction function for univariate linear regression is:

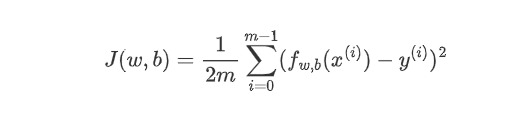

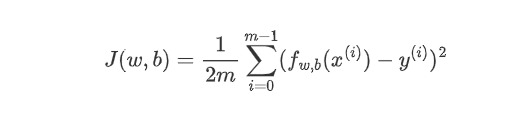
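For reference, the model that the code in this post evaluates (`f_wb = w*x[i] + b`) can be written in LaTeX as follows. The superscript $(i)$ for the $i$-th training example is a notational assumption, not something taken from the figures.

$$
f_{w,b}\left(x^{(i)}\right) = w\,x^{(i)} + b
$$

Here $w$ is the slope (price change per 1000 sq ft) and $b$ is the intercept, both in units of 1000 dollars.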

Cost function:

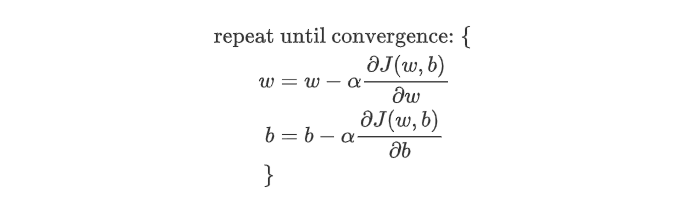

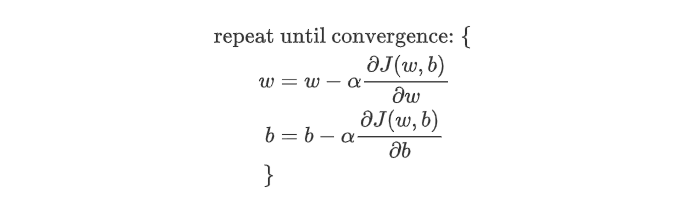
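As a sketch consistent with the `compute_cost` function in this post (which sums the squared errors and divides by `m*2`), the cost can be written as shown below. The zero-based index range mirrors the Python loop and is an assumption about notation.

$$
J(w,b) = \frac{1}{2m}\sum_{i=0}^{m-1}\left(f_{w,b}\left(x^{(i)}\right) - y^{(i)}\right)^2
$$

A quick worked value for the two training points at $w = 1$, $b = 2$: the predictions are $3$ and $4$, so the errors are $-297$ and $-496$, and

$$
J(1,2) = \frac{(-297)^2 + (-496)^2}{2\cdot 2} = \frac{88209 + 246016}{4} = 83556.25
$$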

Gradient descent algorithm:

Here α is the learning rate, which determines the size of each descent step.

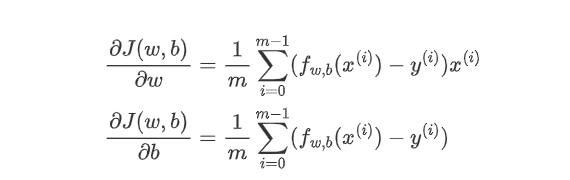

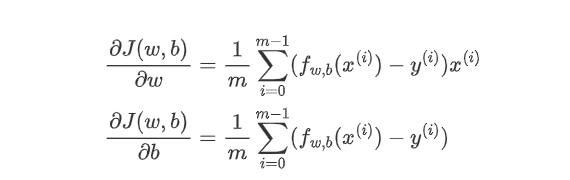

The gradients are:
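Written out in a form that matches the `compute_gradient` and `gradient_descent` functions in this post (a reconstruction from the code, with the same notational assumptions as the code's loop indices), the partial derivatives of the cost are:

$$
\frac{\partial J}{\partial w} = \frac{1}{m}\sum_{i=0}^{m-1}\left(f_{w,b}\left(x^{(i)}\right) - y^{(i)}\right)x^{(i)},
\qquad
\frac{\partial J}{\partial b} = \frac{1}{m}\sum_{i=0}^{m-1}\left(f_{w,b}\left(x^{(i)}\right) - y^{(i)}\right)
$$

and each iteration updates both parameters from gradients evaluated at the same point:

$$
w \leftarrow w - \alpha\,\frac{\partial J}{\partial w},
\qquad
b \leftarrow b - \alpha\,\frac{\partial J}{\partial b}
$$

As a check against the first printed iteration of the example run: starting from $w = 1$, $b = 2$ with $\alpha = 0.01$, the gradients are $\frac{-297\cdot 1 - 496\cdot 2}{2} = -644.5$ and $\frac{-297 - 496}{2} = -396.5$, giving $w = 1 + 6.445 = 7.445$ and $b = 2 + 3.965 = 5.965$.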

Implementation in code:


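    def compute_cost(x, y, w, b):
        # Half the mean squared error over the m training examples.
        m = x.shape[0]
        cost = 0
        for i in range(m):
            f_wb = w*x[i] + b
            cost += (f_wb - y[i])**2
        total_cost = cost/(m*2)
        return total_cost

    def compute_gradient(x, y, w, b):
        # Partial derivatives of the cost with respect to w and b.
        m = x.shape[0]
        dj_dw = 0
        dj_db = 0
        for i in range(m):
            f_wb = w*x[i] + b
            dj_dw_i = (f_wb - y[i])*x[i]
            dj_db_i = (f_wb - y[i])
            dj_dw += dj_dw_i
            dj_db += dj_db_i
        dj_dw = dj_dw/m
        dj_db = dj_db/m
        return dj_dw, dj_db

    def gradient_descent(x, y, w_in, b_in, alpha, num_iters, cost_function, gradient_function):
        '''Fit w and b by gradient descent.

        Args:
          x (ndarray (m,)) : explanatory variable
          y (ndarray (m,)) : target variable
          w_in, b_in (scalar): initial values of the parameters w, b
          alpha (float): learning rate
          num_iters (int): number of gradient descent iterations
          cost_function: function that computes the cost
          gradient_function: function that computes the partial derivatives

        Returns:
          w (scalar), b (scalar): final values of w, b, at which the cost
                                  reaches a local minimum
          J_history (list): cost recorded after each gradient descent step
          p_history (list): parameters [w, b] recorded after each step
        '''
        J_history = []
        p_history = []
        b = b_in
        w = w_in

        for i in range(num_iters):
            # Both gradients are computed before either parameter changes,
            # so the update is simultaneous.
            dj_dw, dj_db = gradient_function(x, y, w, b)
            b = b - alpha*dj_db
            w = w - alpha*dj_dw

            # Cap the history length so very long runs do not exhaust memory.
            if i < 100000:
                J_history.append(cost_function(x, y, w, b))
                p_history.append([w, b])

            # Print progress roughly ten times over the whole run.
            if i % math.ceil(num_iters/10) == 0:
                print(f"Iteration {i:4} Cost: {J_history[-1]:0.2e}",
                      f"dj_dw: {dj_dw: 0.3e}, dj_db: {dj_db: 0.3e}",
                      f"w: {w: 0.3e}, b:{b: 0.5e}")

        return w, b, J_history, p_history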
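The loops above can also be expressed with NumPy array operations. The following is a minimal sketch, not part of the original post; the names `compute_cost_vec` and `compute_gradient_vec` are hypothetical and chosen only for this illustration. It evaluates the cost and gradients at the starting point `w = 1`, `b = 2` used in the example run.

```python
import numpy as np

def compute_cost_vec(x, y, w, b):
    """Half the mean squared error, computed without an explicit loop."""
    err = w * x + b - y              # prediction error for every example
    return np.sum(err ** 2) / (2 * x.shape[0])

def compute_gradient_vec(x, y, w, b):
    """Partial derivatives of the cost with respect to w and b."""
    err = w * x + b - y
    return np.mean(err * x), np.mean(err)

x = np.array([1.0, 2.0])             # size in 1000 sq ft
y = np.array([300.0, 500.0])         # price in 1000s of dollars

cost0 = compute_cost_vec(x, y, 1.0, 2.0)
dj_dw0, dj_db0 = compute_gradient_vec(x, y, 1.0, 2.0)
print(cost0, dj_dw0, dj_db0)
```

Because the functions take the same arguments and return the same kinds of values as the loop versions, they could in principle be passed to `gradient_descent` as `cost_function` and `gradient_function`.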

Example run:


    w_init = 1
    b_init = 2
    iterations = 10000
    tmp_alpha = 1.0e-2
    w_final, b_final, J_hist, p_hist = gradient_descent(x_train, y_train, w_init, b_init, tmp_alpha,
                                                        iterations, compute_cost, compute_gradient)
    print(f"(w,b) found by gradient descent: ({w_final:8.4f},{b_final:8.4f})")
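The run above uses a learning rate of `1.0e-2`. To illustrate how the learning rate controls the step size, here is a small self-contained sketch that repeats the same update rule for 50 steps with two different rates. The helper `final_cost` and the value `0.8` are assumptions made for this illustration; `0.8` is simply an arbitrary rate that turns out to be too large for this data set.

```python
import numpy as np

x = np.array([1.0, 2.0])
y = np.array([300.0, 500.0])

def final_cost(alpha, num_iters=50, w=1.0, b=2.0):
    """Run num_iters gradient descent steps and return the resulting cost."""
    for _ in range(num_iters):
        err = w * x + b - y
        dj_dw, dj_db = np.mean(err * x), np.mean(err)
        # Simultaneous update: both gradients come from the old (w, b).
        w, b = w - alpha * dj_dw, b - alpha * dj_db
    err = w * x + b - y
    return np.sum(err ** 2) / (2 * x.shape[0])

initial = final_cost(0.0, num_iters=0)   # cost at the starting point
small = final_cost(1.0e-2)               # rate used in the example run
large = final_cost(0.8)                  # overshoots on every step

print(f"initial: {initial:.3e}, alpha=0.01: {small:.3e}, alpha=0.8: {large:.3e}")
```

With the small rate the cost falls below its starting value, while with the large rate each step overshoots the minimum and the cost grows instead of shrinking.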

Output:

    Iteration 0 Cost: 7.79e+04 dj_dw: -6.445e+02, dj_db: -3.965e+02 w: 7.445e+00, b: 5.96500e+00
    Iteration 1000 Cost: 3.82e+00 dj_dw: -3.930e-01, dj_db: 6.358e-01 w: 1.946e+02, b: 1.08710e+02
    Iteration 2000 Cost: 8.88e-01 dj_dw: -1.894e-01, dj_db: 3.065e-01 w: 1.974e+02, b: 1.04198e+02
    Iteration 3000 Cost: 2.06e-01 dj_dw: -9.130e-02, dj_db: 1.477e-01 w: 1.987e+02, b: 1.02024e+02
    Iteration 4000 Cost: 4.80e-02 dj_dw: -4.401e-02, dj_db: 7.121e-02 w: 1.994e+02, b: 1.00975e+02
    Iteration 5000 Cost: 1.11e-02 dj_dw: -2.121e-02, dj_db: 3.433e-02 w: 1.997e+02, b: 1.00470e+02
    Iteration 6000 Cost: 2.59e-03 dj_dw: -1.023e-02, dj_db: 1.655e-02 w: 1.999e+02, b: 1.00227e+02
    Iteration 7000 Cost: 6.02e-04 dj_dw: -4.929e-03, dj_db: 7.976e-03 w: 1.999e+02, b: 1.00109e+02
    Iteration 8000 Cost: 1.40e-04 dj_dw: -2.376e-03, dj_db: 3.844e-03 w: 2.000e+02, b: 1.00053e+02
    Iteration 9000 Cost: 3.25e-05 dj_dw: -1.145e-03, dj_db: 1.853e-03 w: 2.000e+02, b: 1.00025e+02
    (w,b) found by gradient descent: (199.9924,100.0122)
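One way to sanity-check this result: two points determine a line exactly, so the least-squares fit for this data set can be computed directly and compared with the values gradient descent reached. The sketch below uses `np.polyfit`, which is not used in the original post and is introduced here only as an independent check.

```python
import numpy as np

x_train = np.array([1.0, 2.0])
y_train = np.array([300.0, 500.0])

# For degree 1, np.polyfit returns [slope, intercept] of the least-squares line.
w_exact, b_exact = np.polyfit(x_train, y_train, 1)

# Final values reported by the gradient descent run in this post.
w_gd, b_gd = 199.9924, 100.0122

print(f"exact fit: w = {w_exact:.4f}, b = {b_exact:.4f}")
print(f"gap to gradient descent: dw = {w_exact - w_gd:.4f}, db = {b_exact - b_gd:.4f}")
```

The exact line through the two points has slope 200 and intercept 100, so the gradient descent result is close but not yet fully converged after 10,000 iterations.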

Note a few characteristics of the gradient descent process printed above.